Bullhorn #2

The Bullhorn

A Newsletter for the Ansible Developer Community

Welcome to The Bullhorn, our newsletter for the Ansible developer community. If you have any questions or content you’d like to share, please reach out to us at the-bullhorn@redhat.com.

THE AWX PROJECT RELEASES 11.2.0

On April 29th, the AWX team released the newest version of AWX, 11.2.0. Notable changes include the use of collection-based plugins by default for versions of Ansible 2.9 and greater, the new ability to monitor stdout in the CLI for running jobs and workflow jobs, enhancements to the HashiCorp Vault credential plugin, and several bugfixes. Read Rebeccah Hunter’s announcement here. For more frequent AWX updates, you can join the AWX project mailing list.

ANSIBLE 101 WITH JEFF GEERLING: EPISODE 7

In the latest video of his Ansible 101 series, Jeff Geerling explores Ansible Galaxy, ansible-lint, Molecule, and testing Ansible roles and playbooks based on content in his bestselling Ansible book, Ansible for DevOps. You can find the full channel of his Ansible videos here.

POLL: DATES FOR NEXT ANSIBLE CONTRIBUTOR SUMMIT

We’re planning our next full-day virtual Ansible Contributor Summit sometime in late June or early July, and we’re looking for feedback on proposed dates. Carol Chen has posted a Doodle poll with options for possible dates; if you’re interested in attending, please fill out the poll so that we know which dates are best for everyone. We will close the poll on Friday, May 22nd.

PROOF OF CONCEPT: SERVERLESS ANSIBLE WITH KNATIVE

William Oliveira has built a new proof-of-concept for using Ansible and Knative to create event-driven playbooks. The proof-of-concept is a web application that can execute playbooks on demand and send the corresponding events to a Knative Event Broker to trigger other applications. Find the source code on GitHub here.

COMMUNITY METRIC HIGHLIGHT: COMMUNITY.GENERAL COLLECTION

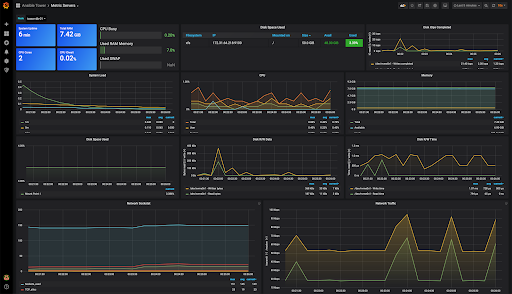

As the Ansible community continues to grow, we rely increasingly on metrics to keep track of our progress towards our goals. Following on from the more general metrics in Issue 1, we've started to consider which collections need special handling beyond the metrics we gather for all collections. Community.general is one such special case. As the home for most modules in Ansible, it sees a lot of contributors come and go, and content there can run the risk of becoming unmaintained. We need to watch this repository carefully so that we can act as needed.

The graph in this section is one from the dashboard we're preparing on this. It shows the number of issues opened and closed per week, along with a simple statistical test of whether the slope of each trend is non-zero. Currently both trends are essentially flat, suggesting that the opening and closing of issues is balanced. We've already got several graphs on this report, and we'll be adding more in the future.
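For readers curious about what a slope test like this can look like, here is a minimal Python sketch. It is not the code behind our dashboard: the function names, the sample counts, and the rule-of-thumb threshold of 2.0 are all assumptions made for illustration. It fits a straight line to weekly counts by ordinary least squares and reports how many standard errors the slope is away from zero.

```python
import math


def slope_t_statistic(counts):
    """Fit counts = a + b * week by ordinary least squares.

    Weeks are numbered 0..n-1. Returns (slope, standard_error, t_statistic).
    """
    n = len(counts)
    if n < 3:
        raise ValueError("need at least 3 weekly counts")
    mean_x = (n - 1) / 2
    mean_y = sum(counts) / n
    sxx = sum((x - mean_x) ** 2 for x in range(n))
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(counts))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    # Residual sum of squares around the fitted line.
    rss = sum((y - (intercept + slope * x)) ** 2 for x, y in enumerate(counts))
    stderr = math.sqrt(rss / (n - 2) / sxx)
    if stderr == 0:
        # The points lie exactly on a line: either perfectly flat or not.
        t = 0.0 if slope == 0 else math.copysign(math.inf, slope)
    else:
        t = slope / stderr
    return slope, stderr, t


def is_flat(counts, threshold=2.0):
    """Rough check: treat the trend as flat when |t| is below the threshold.

    The default of 2.0 is an assumed rule of thumb, not an exact
    critical value for any particular sample size.
    """
    _, _, t = slope_t_statistic(counts)
    return abs(t) < threshold


# Made-up weekly counts, for illustration only.
opened_per_week = [10, 12, 10, 12, 10, 12]
closed_per_week = [1, 3, 2, 5, 4, 7, 6, 9]
print("opened flat?", is_flat(opened_per_week))
print("closed flat?", is_flat(closed_per_week))
```

A real report would more likely compute a p-value from the t distribution with a statistics library; the fixed threshold here just keeps the sketch dependency-free.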

ANSIBLE VIRTUAL MEETUPS

The following virtual meetups are being held in the Ansible community over the next month:

Ansible Minneapolis: CyberArk’s integration with Ansible Automation

Thu, May 21 · 6:30 PM CDT

https://www.meetup.com/Ansible-Minneapolis/events/sbqkgrybchbcc/

Ansible Northern Virginia: Spring Soiree!

Thu, May 21 · 4:00 PM EDT

https://www.meetup.com/Ansible-NOVA/events/270368639/

Ansible Paris: Webinar #1

Thu, May 14 · 11:00 AM GMT+2

https://www.meetup.com/Ansible-Paris/events/270584272/

We are planning more virtual meetups to reach a broader audience, and we want to hear from you! Have you started using Ansible recently, or are you a long-time user? How has Ansible improved your workflow or scaled up your automation? What are some of the challenges you’ve faced and lessons learned? Share your experience by presenting at a Virtual Ansible Meetup: https://forms.gle/aG5zpVkXDVMHLERX9

You’ll have the option to pre-record the presentation and be available during the meetup for live Q&A, or to deliver the presentation live. We will work with you on the optimal setup, and share some cool Ansible swag with you!

FEEDBACK

Have any questions you’d like to ask, or issues you’d like to see covered? Please email us at the-bullhorn@redhat.com.